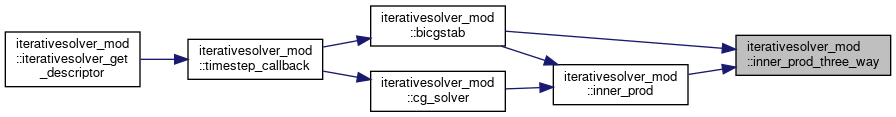

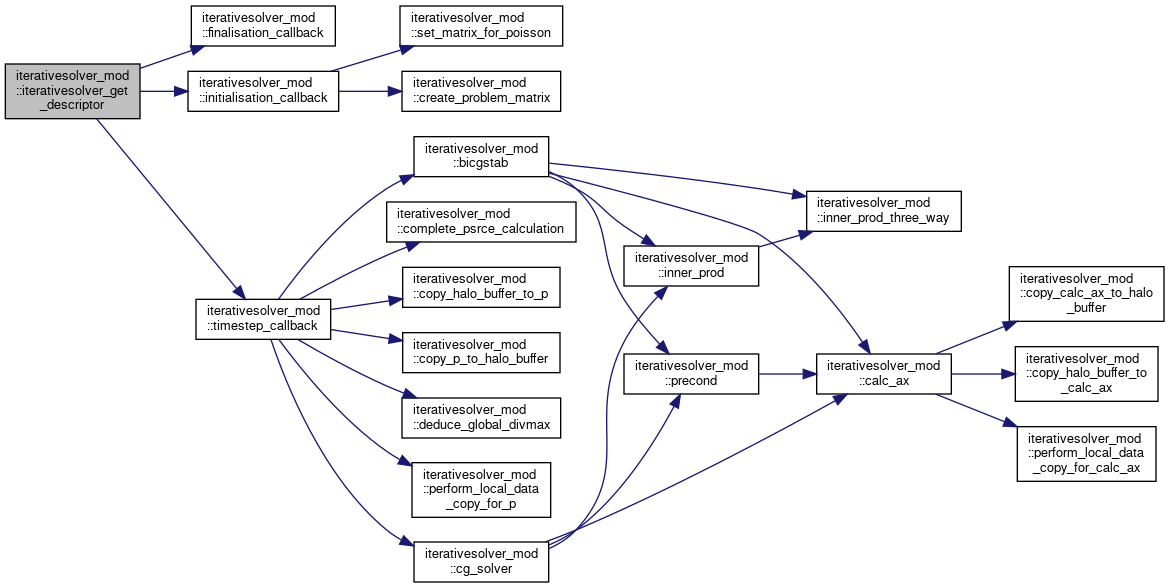

This is the iterative pressure solver, which uses a Jacobi-preconditioned BiCGStab method implemented in this module.

Data Types

| Type | Name | Description |
| --- | --- | --- |
| type | matrix_type | A helper type to abstract the concrete details of the matrix. |

Functions/Subroutines | |

| type(component_descriptor_type) function, public | iterativesolver_get_descriptor () |

| Descriptor of the iterative solver component used by the registry. More... | |

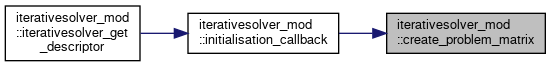

| subroutine | initialisation_callback (current_state) |

| Initialisation callback hook which will set up the halo swapping state and allocate some data. More... | |

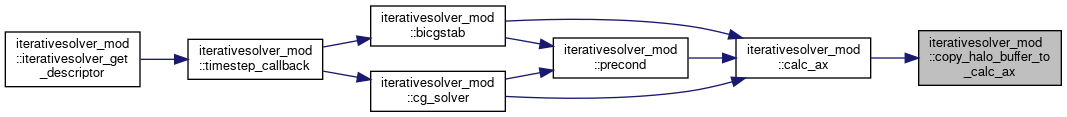

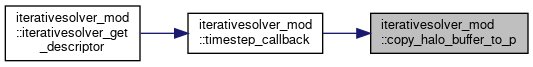

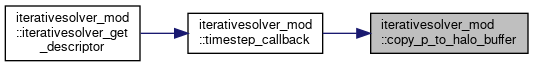

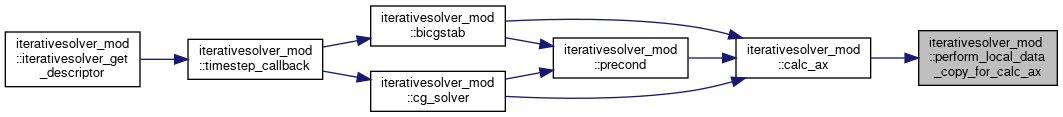

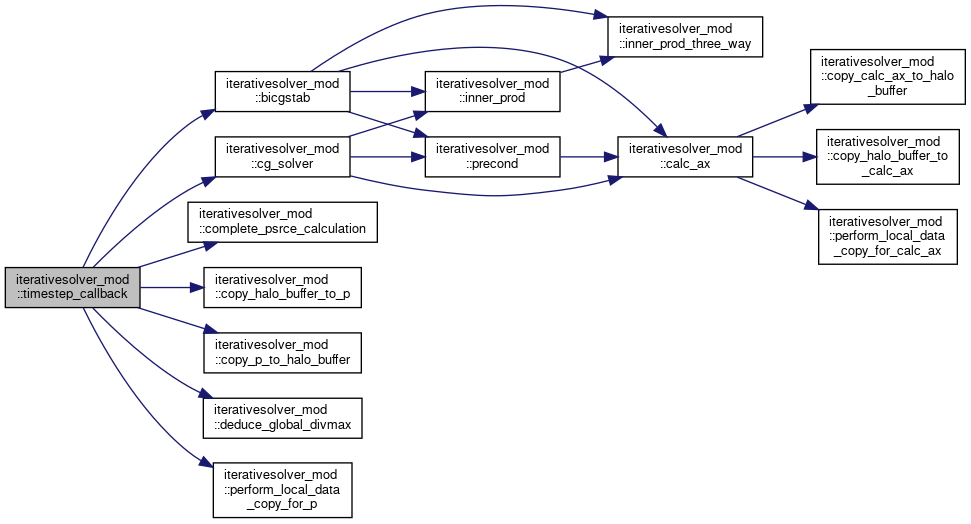

| subroutine | timestep_callback (current_state) |

| Timestep callback, this ignores all but the last column where it calls the solver. More... | |

| subroutine | finalisation_callback (current_state) |

| Called as MONC is shutting down and frees the halo swap state and deallocates local data. More... | |

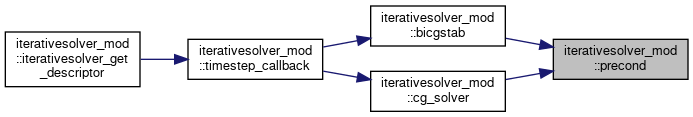

| subroutine | bicgstab (current_state, A, x, b, i_strt, i_end, j_strt, j_end, k_end) |

| Performs the BiCGStab KS method. More... | |

| subroutine | cg_solver (current_state, A, x, b, i_strt, i_end, j_strt, j_end, k_end) |

| Performs the preconditioned conjugate gradient method. More... | |

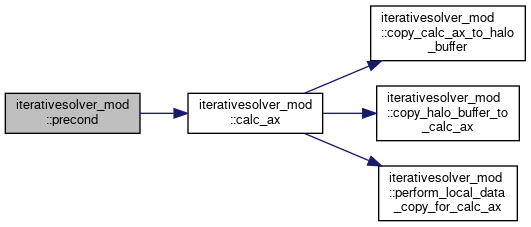

| subroutine | precond (current_state, A, s, r, preits) |

| Jacobi preconditioner. More... | |

| subroutine | calc_ax (current_state, A, x, Ax) |

| Calculates A * x. More... | |

| real(kind=default_precision) function | inner_prod (current_state, x, y, i_strt, i_end, j_strt, j_end, k_end) |

| Returns the global inner product of two vectors, ignoring the halo cells. More... | |

| real(kind=default_precision) function, dimension(3) | inner_prod_three_way (current_state, t, s, i_strt, i_end, j_strt, j_end, k_end) |

| Returns the global inner product of a pair of vectors, ignoring the halo cells for three separate pairs. This call is for optimisation to bunch up the comms in a BiCGStab solver per iteration. More... | |

| subroutine | set_matrix_for_poisson (grid_configuration, A, z_size) |

| Sets the values of the provided matrix to solve the poisson equation. More... | |

| subroutine | deduce_global_divmax (current_state) |

| Determines the global divmax which is written into the current state. More... | |

| subroutine | copy_p_to_halo_buffer (current_state, neighbour_description, dim, source_index, pid_location, current_page, source_data) |

| Copies the p field data to halo buffers for a specific process in a dimension and halo cell. More... | |

| subroutine | copy_calc_ax_to_halo_buffer (current_state, neighbour_description, dim, source_index, pid_location, current_page, source_data) |

| Copies the source field data to halo buffers for a specific process in a dimension and halo cell - for the calc_Ax halo swaps. More... | |

| subroutine | copy_halo_buffer_to_p (current_state, neighbour_description, dim, target_index, neighbour_location, current_page, source_data) |

| Copies the halo buffer to halo location for the p field. More... | |

| subroutine | copy_halo_buffer_to_calc_ax (current_state, neighbour_description, dim, target_index, neighbour_location, current_page, source_data) |

| Copies the halo buffer to halo location for the source field as required in the calc_Ax procedure. More... | |

| subroutine | perform_local_data_copy_for_p (current_state, halo_depth, involve_corners, source_data) |

| Does local data copying for P variable halo swap. More... | |

| subroutine | perform_local_data_copy_for_calc_ax (current_state, halo_depth, involve_corners, source_data) |

| Does a local data copy for halo swapping cells with wrap around (to maintain periodic boundary condition) More... | |

| type(matrix_type) function | create_problem_matrix (z_size) |

| Creates a problem matrix, allocating the required data based upon the column size. More... | |

| subroutine | complete_psrce_calculation (current_state, y_halo_size, x_halo_size) |

| Completes the psrce calculation by waiting on all outstanding psrce communications to complete and then combine the received values with the P field for U and V. More... | |

Variables

| Type | Name | Description |
| --- | --- | --- |
| real(kind=default_precision) | tolerance | |
| real(kind=default_precision) | relaxation | Solving tolerance. |
| integer | max_iterations | Maximum number of BiCGStab iterations. |
| integer | preconditioner_iterations | Number of preconditioner iterations to perform per call. |
| logical | symm_prob | |
| real(kind=default_precision), parameter | tiny = 1.0e-16 | Minimum residual - if we go below this then something has gone wrong. |
| type(halo_communication_type), save | halo_swap_state | The halo swap state as initialised by that module. |
| real(kind=default_precision), dimension(:,:,:), allocatable | psource | |
| real(kind=default_precision), dimension(:,:,:), allocatable | prev_p | Passed to BiCGStab as the RHS. |
| logical | first_run = .true. | |
| type(matrix_type) | a | |


Function/Subroutine Documentation

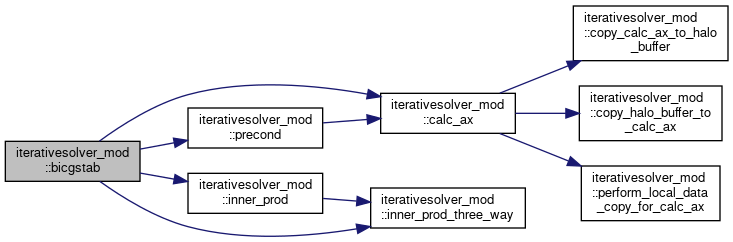

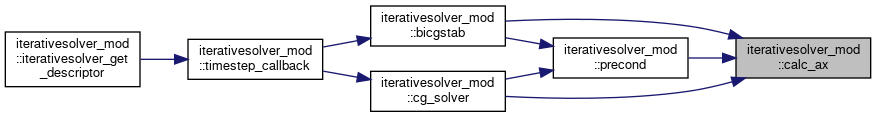

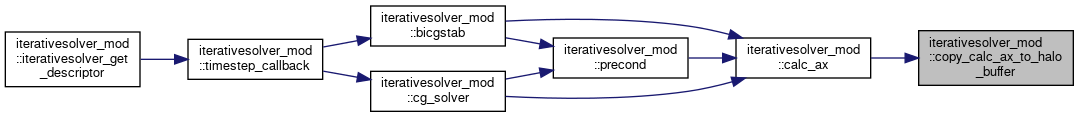

◆ bicgstab()

private

Performs the BiCGStab Krylov subspace (KS) method.

- Parameters
  - current_state: The current model state
  - A: The matrix
  - x: The solution
  - b: The RHS

Definition at line 135 of file iterativesolver.F90.
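
To make the method concrete, here is a minimal sketch of right-preconditioned BiCGStab with a single-sweep Jacobi preconditioner, written in Python on a small dense matrix rather than on MONC's distributed grid. It is not the code in iterativesolver.F90: the helper names, the list-of-lists matrix, and the relative-residual stopping test are assumptions made for illustration, and only the tolerance and max_iterations names echo the module variables.

```python
def matvec(A, x):
    """Dense matrix-vector product A * x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    """Inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def jacobi(A, r):
    """One Jacobi sweep from a zero guess: divide by the diagonal of A."""
    return [ri / A[i][i] for i, ri in enumerate(r)]


def bicgstab(A, b, tolerance=1e-8, max_iterations=100):
    """Solve A x = b and return (x, iterations used). Illustrative only."""
    n = len(b)
    x = [0.0] * n
    r = b[:]                 # residual of the zero initial guess
    r_hat = r[:]             # fixed shadow residual
    rho = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    b_norm = dot(b, b) ** 0.5 or 1.0
    for iteration in range(1, max_iterations + 1):
        rho_new = dot(r_hat, r)
        beta = (rho_new / rho) * (alpha / omega)
        rho = rho_new
        p = [ri + beta * (pi - omega * vi) for ri, pi, vi in zip(r, p, v)]
        y = jacobi(A, p)                     # preconditioned search direction
        v = matvec(A, y)
        alpha = rho / dot(r_hat, v)
        s = [ri - alpha * vi for ri, vi in zip(r, v)]
        if dot(s, s) ** 0.5 / b_norm < tolerance:
            # Already converged after the first half step.
            return [xi + alpha * yi for xi, yi in zip(x, y)], iteration
        z = jacobi(A, s)
        t = matvec(A, z)
        omega = dot(t, s) / dot(t, t)        # stabilising step length
        x = [xi + alpha * yi + omega * zi for xi, yi, zi in zip(x, y, z)]
        r = [si - omega * ti for si, ti in zip(s, t)]
        if dot(r, r) ** 0.5 / b_norm < tolerance:
            return x, iteration
    return x, max_iterations


# Hypothetical nonsymmetric test system with known solution [1, 2, 3].
A = [[4.0, 1.0, 0.0], [2.0, 5.0, 1.0], [0.0, 1.0, 3.0]]
b = matvec(A, [1.0, 2.0, 3.0])
solution, iterations = bicgstab(A, b)
```

Each iteration needs two matrix-vector products and several inner products, which is why a distributed implementation benefits from grouping the inner-product communications.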

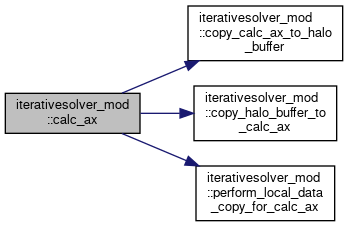

◆ calc_ax()

private

Calculates A * x.

- Parameters
  - current_state: The current model state
  - A: The matrix
  - x: Vector to multiply with
  - Ax: Result of A*x

Definition at line 378 of file iterativesolver.F90.

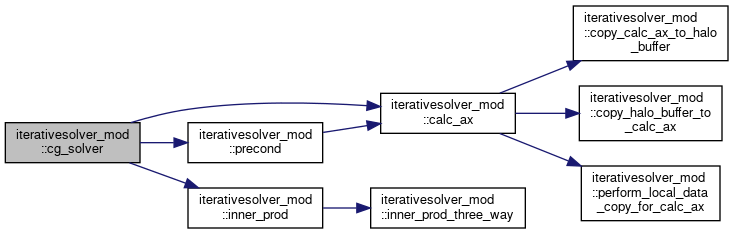

◆ cg_solver()

private

Performs the preconditioned conjugate gradient method.

- Parameters
  - current_state: The current model state
  - A: The matrix
  - x: The solution
  - b: The RHS

Definition at line 248 of file iterativesolver.F90.
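
For comparison with BiCGStab, the preconditioned conjugate gradient recurrence can be sketched as follows. This is a hedged illustration in Python on a small dense, symmetric positive-definite matrix, using the diagonal of A as the preconditioner; the function names and the stopping test are assumptions, not the contents of cg_solver.

```python
def matvec(A, x):
    """Dense matrix-vector product A * x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    """Inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def jacobi(A, r):
    """Diagonal (Jacobi) preconditioner applied once."""
    return [ri / A[i][i] for i, ri in enumerate(r)]


def preconditioned_cg(A, b, tolerance=1e-8, max_iterations=100):
    """Solve A x = b for symmetric positive-definite A. Illustrative only."""
    x = [0.0] * len(b)
    r = b[:]                      # residual of the zero initial guess
    z = jacobi(A, r)              # preconditioned residual
    p = z[:]                      # first search direction
    rz = dot(r, z)
    b_norm = dot(b, b) ** 0.5 or 1.0
    for iteration in range(1, max_iterations + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if dot(r, r) ** 0.5 / b_norm < tolerance:
            return x, iteration
        z = jacobi(A, r)
        rz_new = dot(r, z)
        beta = rz_new / rz
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iterations


# Hypothetical symmetric positive-definite system with known solution [1, 2, 3].
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = matvec(A, [1.0, 2.0, 3.0])
solution, iterations = preconditioned_cg(A, b)
```

Conjugate gradient needs one matrix-vector product per iteration but is only valid for symmetric positive-definite systems, whereas BiCGStab also handles nonsymmetric ones.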

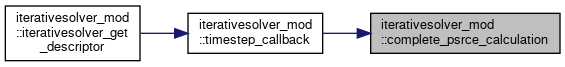

◆ complete_psrce_calculation()

private

Completes the psrce calculation by waiting on all outstanding psrce communications to complete and then combining the received values with the P field for U and V.

- Parameters
  - current_state: The current model state
  - y_halo_size: The halo size in the Y dimension
  - x_halo_size: The halo size in the X dimension

Definition at line 735 of file iterativesolver.F90.

◆ copy_calc_ax_to_halo_buffer()

private

Copies the source field data to halo buffers for a specific process in a dimension and halo cell, for the calc_Ax halo swaps.

- Parameters
  - current_state: The current model state
  - neighbour_description: Description of the neighbour halo swapping status
  - dim: Dimension to copy from
  - source_index: The source index of the dimension we are reading from in the prognostic field
  - pid_location: Location of the neighbouring process in the local stored data structures
  - current_page: The current (next) buffer page to copy into
  - source_data: Optional source data which is read from

Definition at line 623 of file iterativesolver.F90.

◆ copy_halo_buffer_to_calc_ax()

private

Copies the halo buffer to halo location for the source field as required in the calc_Ax procedure.

- Parameters
  - current_state: The current model state
  - neighbour_description: The halo swapping description of the neighbour we are accessing the buffer of
  - dim: The dimension we receive for
  - target_index: The target index for the dimension we are receiving for
  - neighbour_location: The location in the local neighbour data stores of this neighbour
  - current_page: The current, next, halo swap page to read from (all previous have been read and copied already)
  - source_data: Optional source data which is written into

Definition at line 671 of file iterativesolver.F90.

◆ copy_halo_buffer_to_p()

private

Copies the halo buffer to halo location for the p field.

- Parameters
  - current_state: The current model state
  - neighbour_description: The halo swapping description of the neighbour we are accessing the buffer of
  - dim: The dimension we receive for
  - target_index: The target index for the dimension we are receiving for
  - neighbour_location: The location in the local neighbour data stores of this neighbour
  - current_page: The current, next, halo swap page to read from (all previous have been read and copied already)
  - source_data: Optional source data which is written into

Definition at line 649 of file iterativesolver.F90.

◆ copy_p_to_halo_buffer()

private

Copies the p field data to halo buffers for a specific process in a dimension and halo cell.

- Parameters
  - current_state: The current model state
  - neighbour_description: Description of the neighbour halo swapping status
  - dim: Dimension to copy from
  - source_index: The source index of the dimension we are reading from in the prognostic field
  - pid_location: Location of the neighbouring process in the local stored data structures
  - current_page: The current (next) buffer page to copy into
  - source_data: Optional source data which is read from

Definition at line 601 of file iterativesolver.F90.

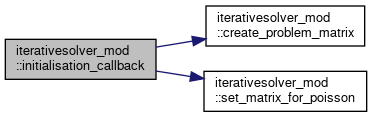

◆ create_problem_matrix()

private

Creates a problem matrix, allocating the required data based upon the column size.

- Parameters
  - z_size: Number of elements in the vertical column

- Returns

- The allocated matrix ready to be used

Definition at line 722 of file iterativesolver.F90.

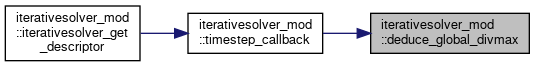

◆ deduce_global_divmax()

private

Determines the global divmax which is written into the current state.

- Parameters
  - current_state: The current model state

Definition at line 584 of file iterativesolver.F90.

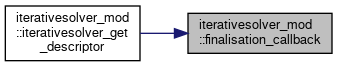

◆ finalisation_callback()

private

Called as MONC is shutting down; frees the halo swap state and deallocates local data.

- Parameters
  - current_state: The current model state

Definition at line 123 of file iterativesolver.F90.

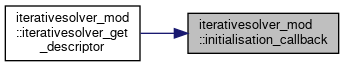

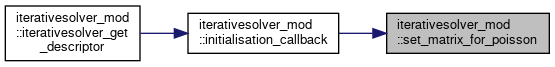

◆ initialisation_callback()

private

Initialisation callback hook which will set up the halo swapping state and allocate some data.

- Parameters
  - current_state: The current model state

Definition at line 57 of file iterativesolver.F90.

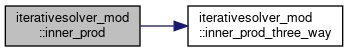

◆ inner_prod()

private

Returns the global inner product of two vectors, ignoring the halo cells.

- Parameters
  - current_state: The current model state
  - x: First vector
  - y: Second vector

- Returns

- Global inner product of the two input vectors

Definition at line 478 of file iterativesolver.F90.
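
One way to picture a halo-excluding global inner product is a local sum over interior cells followed by a global sum across processes. The sketch below simulates the processes as a Python list of 1D local arrays; the function names and the halo argument are hypothetical, and the plain sum stands in for whatever global reduction the Fortran code performs. Reading the i_strt to k_end arguments as interior bounds is likewise an assumption.

```python
def local_interior_dot(x, y, halo):
    """Dot product over the interior of two local arrays, skipping halo cells at both ends."""
    x_in = x[halo:len(x) - halo]
    y_in = y[halo:len(y) - halo]
    return sum(a * b for a, b in zip(x_in, y_in))


def global_inner_prod(local_xs, local_ys, halo):
    """Sum the per-process interior dot products (stand-in for a global sum reduction)."""
    return sum(local_interior_dot(x, y, halo) for x, y in zip(local_xs, local_ys))


# Two simulated processes, one halo cell on each side (the 9s are halo values).
xs = [[9, 1, 2, 9], [9, 5, 6, 9]]
ys = [[9, 3, 4, 9], [9, 7, 8, 9]]
result = global_inner_prod(xs, ys, halo=1)   # (1*3 + 2*4) + (5*7 + 6*8) = 94
```

Skipping the halo matters because halo cells duplicate interior cells owned by a neighbouring process; including them would count those entries twice.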

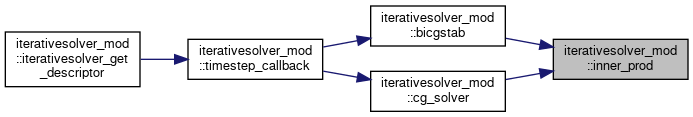

◆ inner_prod_three_way()

private

Returns the global inner products of three separate pairs of vectors, ignoring the halo cells. This call is an optimisation that bunches up the communications in a BiCGStab solver per iteration.

- Parameters
  - current_state: The current model state
  - t: First vector
  - s: Second vector

- Returns

- Global inner product of the two input vectors

Definition at line 506 of file iterativesolver.F90.
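
The benefit of bunching the communications can be sketched as follows: each process computes three local partial sums and all three are combined in a single element-wise reduction instead of three separate ones. Which three pairs the Fortran routine forms is not stated on this page; the choice of (t,s), (t,t) and (s,s) below is an assumption, as are the function names and the simulated processes.

```python
def dot(u, v):
    """Inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def local_three_way(t, s, halo):
    """Three interior partial sums on one process: (t,s), (t,t), (s,s) (assumed pairs)."""
    t_in = t[halo:len(t) - halo]
    s_in = s[halo:len(s) - halo]
    return [dot(t_in, s_in), dot(t_in, t_in), dot(s_in, s_in)]


def allreduce_sum(per_process):
    """Element-wise sum across processes; models ONE reduction carrying three values."""
    return [sum(values) for values in zip(*per_process)]


# Two simulated processes with one halo cell on each side (the 9s are halo values).
ts = [[9, 1, 2, 9], [9, 5, 9]]
ss = [[9, 3, 4, 9], [9, 6, 9]]
partials = [local_three_way(t, s, halo=1) for t, s in zip(ts, ss)]
totals = allreduce_sum(partials)   # [41, 30, 61]
```

Because the latency of a global reduction is largely independent of whether it carries one value or three, combining them reduces the number of synchronisation points per solver iteration.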

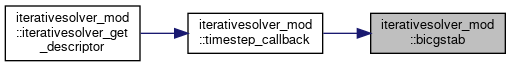

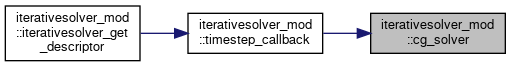

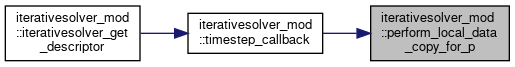

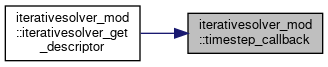

◆ iterativesolver_get_descriptor()

| type(component_descriptor_type) function, public iterativesolver_mod::iterativesolver_get_descriptor |

Descriptor of the iterative solver component used by the registry.

- Returns

- The iterative solver component descriptor

Definition at line 47 of file iterativesolver.F90.

◆ perform_local_data_copy_for_calc_ax()

private

Does a local data copy for halo swapping cells with wrap around (to maintain periodic boundary condition).

- Parameters
  - current_state: The current model state
  - source_data: Optional source data which is written into

Definition at line 705 of file iterativesolver.F90.
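
A wrap-around local copy can be illustrated in one dimension: when a process is its own neighbour, each halo cell is filled from the interior cell at the opposite edge. The sketch below is a simplified Python analogue, not the Fortran routine; the function name and the 1D layout (halo, interior, halo) are assumptions, and the involve_corners option has no counterpart in one dimension.

```python
def wrap_halo_copy(field, halo_depth):
    """Fill the halo cells of a 1D periodic field in place from the opposite interior edge."""
    interior = len(field) - 2 * halo_depth
    for h in range(halo_depth):
        # Left halo cell h mirrors the interior cell 'interior' places to its right.
        field[h] = field[interior + h]
        # Right halo cell h mirrors the interior cell 'interior' places to its left.
        field[halo_depth + interior + h] = field[halo_depth + h]
    return field


shallow = wrap_halo_copy([0, 1, 2, 3, 0], halo_depth=1)          # [3, 1, 2, 3, 1]
deep = wrap_halo_copy([0, 0, 1, 2, 3, 4, 0, 0], halo_depth=2)    # [3, 4, 1, 2, 3, 4, 1, 2]
```

After the copy, a stencil applied at the first or last interior cell reads the periodic neighbour directly from the halo with no special-case indexing.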

◆ perform_local_data_copy_for_p()

private

Does local data copying for P variable halo swap.

- Parameters
  - current_state: The current model state
  - source_data: Optional source data which is written into

Definition at line 692 of file iterativesolver.F90.

◆ precond()

private

Jacobi preconditioner.

- Parameters
  - current_state: The current model state
  - A: The matrix
  - s: Written into as result of preconditioning
  - r: Input values to preconditioner
  - preits: Number of iterations of the preconditioner to perform per call

Definition at line 320 of file iterativesolver.F90.
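
As a rough illustration of what a Jacobi preconditioner with a configurable iteration count does, the sketch below approximately solves A s = r by repeating the update s = s + D^-1 (r - A s), where D is the diagonal of A, starting from s = 0. It is written in Python on a dense matrix; the function name is hypothetical, and whether the Fortran routine starts from zero or applies the module's relaxation factor is not stated here, so neither is modelled.

```python
def jacobi_precond(A, r, preits):
    """Apply 'preits' Jacobi iterations to A s = r, starting from s = 0."""
    n = len(r)
    s = [0.0] * n
    for _ in range(preits):
        As = [sum(a * sj for a, sj in zip(row, s)) for row in A]
        # Correct each entry by its residual scaled by the matching diagonal entry.
        s = [s[i] + (r[i] - As[i]) / A[i][i] for i in range(n)]
    return s


A = [[4.0, 1.0], [1.0, 3.0]]     # hypothetical diagonally dominant matrix
r = [1.0, 2.0]
one_sweep = jacobi_precond(A, r, preits=1)     # simply r divided by the diagonal
many_sweeps = jacobi_precond(A, r, preits=50)  # approaches the exact solve of A s = r
```

With a single iteration the preconditioner is just a diagonal scaling; more iterations give a better approximation to the inverse of A at the cost of extra matrix-vector products per call.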

◆ set_matrix_for_poisson()

private

Sets the values of the provided matrix to solve the Poisson equation.

- Parameters
  - grid_configuration: Configuration of the vertical and horizontal grids
  - A: The matrix that the values are written into
  - z_size: Number of elements in a column

Definition at line 537 of file iterativesolver.F90.

◆ timestep_callback()

private

Timestep callback; this ignores all but the last column, where it calls the solver.

- Parameters
  - current_state: The current model state

Definition at line 84 of file iterativesolver.F90.

Variable Documentation

◆ a

private

Definition at line 40 of file iterativesolver.F90.

◆ first_run

private

Definition at line 39 of file iterativesolver.F90.

◆ halo_swap_state

private

The halo swap state as initialised by that module.

Definition at line 37 of file iterativesolver.F90.

◆ max_iterations

private

Maximum number of BiCGStab iterations.

Definition at line 31 of file iterativesolver.F90.

◆ preconditioner_iterations

private

Number of preconditioner iterations to perform per call.

Definition at line 31 of file iterativesolver.F90.

◆ prev_p

private

Passed to BiCGStab as the RHS.

Definition at line 38 of file iterativesolver.F90.

◆ psource

private

Definition at line 38 of file iterativesolver.F90.

◆ relaxation

private

Solving tolerance.

Definition at line 30 of file iterativesolver.F90.

◆ symm_prob

private

Definition at line 33 of file iterativesolver.F90.

◆ tiny

private

Minimum residual - if we go below this then something has gone wrong.

Definition at line 35 of file iterativesolver.F90.

◆ tolerance

private

Definition at line 30 of file iterativesolver.F90.